Data integration tools combine data from various sources into one clear picture. They make it easy to gather, transform, and merge data from different systems, databases, and apps. By automating these processes, companies can keep their data accurate and up to date while improving overall data quality. From connecting different data sources to keeping data consistent across platforms, these tools help businesses make smarter choices faster. They shape how firms use their data to grow and succeed.
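
To make the gather, transform, and merge flow described above concrete, here is a minimal sketch in plain Python. It is not how any particular product works: the two sources (`CRM_CSV`, `BILLING_ROWS`), the field names, and the helper functions are all hypothetical and exist only for illustration.

```python
import csv
import io

# Hypothetical inputs: two "systems" that export customer data in different shapes.
CRM_CSV = "id,name,email\n1,Ada Lovelace,ADA@EXAMPLE.COM\n2,Alan Turing,alan@example.com\n"
BILLING_ROWS = [
    {"customer_id": 1, "plan": "pro"},
    {"customer_id": 2, "plan": "free"},
]

def extract_crm(text):
    """Gather: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform_crm(rows):
    """Transform: cast ids to int and normalize email casing."""
    return [
        {"id": int(r["id"]), "name": r["name"].strip(), "email": r["email"].strip().lower()}
        for r in rows
    ]

def merge(customers, billing):
    """Merge: join the two sources on the customer id."""
    plan_by_id = {b["customer_id"]: b["plan"] for b in billing}
    return [{**c, "plan": plan_by_id.get(c["id"])} for c in customers]

unified = merge(transform_crm(extract_crm(CRM_CSV)), BILLING_ROWS)
```

Each record in `unified` now carries fields from both sources. Real tools add scheduling, error handling, and connectors on top of this basic pattern.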

According to recent studies, companies investing in these platforms see a 23% boost in productivity because data is easier to access and more processes are automated. The global market for data solutions is also projected to grow at a compound annual growth rate (CAGR) of 11.4%, driven by demand for cloud-based services and the need to handle very large datasets. These figures show how crucial it is for businesses to handle data in real time and streamline workflows if they want to get the most out of their operations.

Picking the right software for your business can be tricky. Small startups and big companies have different needs. The best choice depends on how big your company is, how complex your data is, and what you need the software to do. In this guide, we’ll look at some of the top platforms for 2024. We’ve grouped them by business size and tech needs to help you decide what’s best for you.

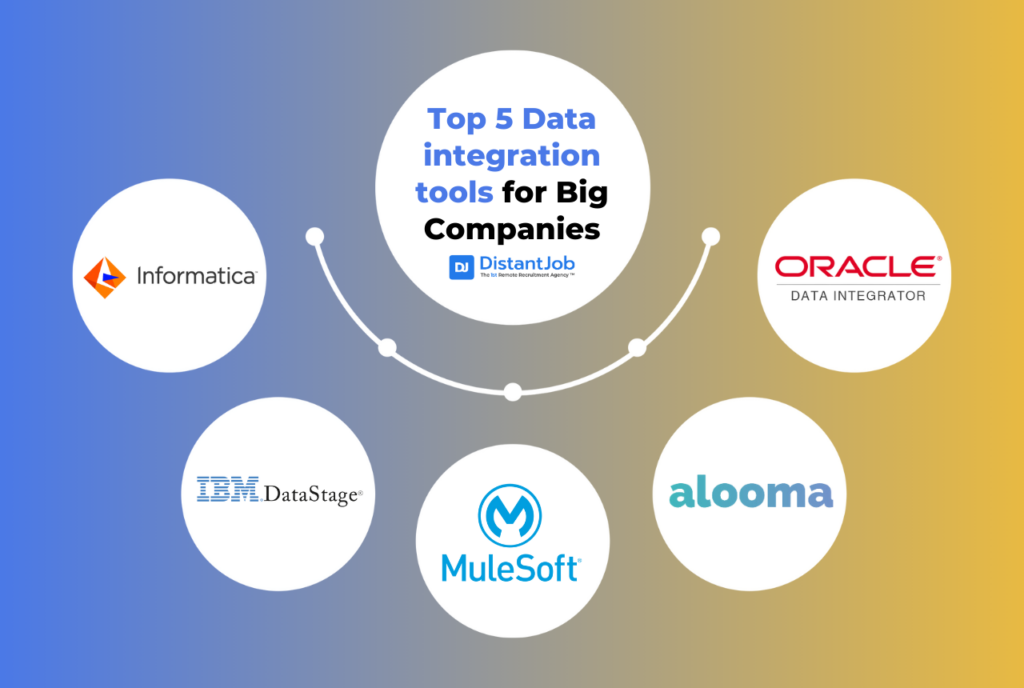

Top Data Integration Tools for Big Companies

Large businesses need data integration tools that can scale and handle complex data. These tools can process large data volumes, meet compliance requirements, and connect different systems.

Here are 5 you might want to look at:

1. MuleSoft Anypoint Platform

MuleSoft Anypoint Platform offers a complete solution for API management and integration. It links applications, data, and devices across cloud and on-premises systems, supporting both real-time and batch processing. Its API management tools enable secure, centralized control, making it a top pick for enterprise API integration.

MuleSoft Anypoint Platform Pros:

- Complete API Management: Manages the entire API lifecycle, covering creation, testing, deployment, and monitoring.

- Hybrid Integration: Works with both cloud and on-premises systems, giving users freedom to choose their setup.

- Adaptable: The platform adapts to different business requirements, letting users design their own workflows and integration routes.

- Secure and Compliant: Comes with built-in security measures such as OAuth and data encryption to help meet strict regulatory requirements.

- Scalable: Handles large data volumes well, making it a good fit for large companies with lots of API traffic.

MuleSoft Anypoint Platform Cons:

- High Costs: Prices depend on API calls and can get pricey as use grows.

- Complex Setup: The tool needs a lot of technical know-how to set up, integrate, and run over time.

- Steep Learning Curve: The platform is powerful but complex, making it tough for teams without strong technical skills.

Best For: Big companies that need to handle complex API tasks.

2. Informatica PowerCenter

Informatica PowerCenter is a top-tier ETL platform for big businesses. It shines when it comes to joining different data sources and apps. It offers full data management capabilities covering data quality, governance, and metadata handling.

Informatica PowerCenter Pros:

- High Scalability and Performance: Has the ability to handle huge amounts of data and work well under heavy loads, which makes it perfect for big business projects.

- Extensive Data Connectivity: Allows connections across many different systems, including cloud platforms, databases, big data systems, and more.

- Strong Metadata Management: Helps to track where data comes from and how changes affect it, to make sure data is good quality and easy to trace.

- Enhanced Data Security: Includes user-based access control, encryption, and other safety measures to meet industry rules like GDPR and HIPAA.

- Data Quality Management: Provides tools to clean and check data to ensure accurate, high-quality datasets.

Informatica PowerCenter Cons:

- Costly Licenses: PowerCenter charges steep fees for its licenses, which often puts it out of reach for medium-sized companies or smaller businesses.

- Tricky to Set Up: Users face a tough time learning the platform, and it needs dedicated tech teams to run and keep it going.

- Demands a Lot of Resources: Given its features, it needs robust infrastructure when crunching big datasets, which might mean buying extra hardware.

Best For: Integrating data on a big scale across many systems and datasets.

3. IBM InfoSphere DataStage

IBM InfoSphere DataStage stands out as a powerful tool to integrate data. It shines at processing tasks in parallel and handling big data management jobs. Its design suits real-time, high-volume data integration projects.

IBM InfoSphere DataStage Pros:

- Parallel Processing: DataStage speeds up big integration projects by splitting data into partitions and processing them at the same time. This makes it a good fit for big companies that need to handle lots of data.

- Support for Complex Data Workflows: Deals with tricky ETL jobs, from getting data from many places to changing it and putting it into target systems.

- Comprehensive Data Governance: Works with IBM’s InfoSphere suite to ensure strong data control and security, helping to protect data privacy and meet compliance requirements.

- Real-Time Data Integration: Can handle real-time and batch integration tasks, which helps to make high-speed data workflows work better.
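
As a rough illustration of the parallel processing idea in the list above, the sketch below splits rows into partitions and transforms them concurrently. It is not DataStage code: the `partition`, `transform`, and `run_parallel` helpers and the order fields are hypothetical, and it uses threads only to keep the example short (CPU-heavy transforms would normally run in separate processes or on separate nodes).

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows, n_parts):
    """Split rows into n_parts roughly equal slices (round-robin)."""
    return [rows[i::n_parts] for i in range(n_parts)]

def transform(part):
    """Hypothetical per-partition transform: convert cents to dollars."""
    return [{"order_id": r["order_id"], "amount": r["amount_cents"] / 100} for r in part]

def run_parallel(rows, n_parts=4):
    """Transform every partition concurrently, then flatten the results."""
    parts = partition(rows, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        # map() returns results in partition order; list() waits for all of them.
        results = list(pool.map(transform, parts))
    return [row for part in results for row in part]

orders = [{"order_id": i, "amount_cents": i * 250} for i in range(1, 9)]
converted = run_parallel(orders)
```

Because the rows are dealt out round-robin, `converted` comes back grouped by partition rather than in the original order, so sort it afterwards if order matters.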

IBM InfoSphere DataStage Cons:

- Expensive: As a tool for big businesses, it costs a lot, which can make it hard for smaller companies to afford.

- Hard to Learn: You need a lot of technical know-how to set up and get the most out of DataStage, especially if you’re new to processing data in parallel.

- Tricky to Set Up: Getting it running and keeping it going can take a lot of work, requiring a strong IT setup and skilled people.

Best For: Big companies dealing with huge amounts of data and time-sensitive operations.

4. Oracle Data Integrator (ODI)

Oracle Data Integrator (ODI) uses an E-LT setup made to move and process data in Oracle systems. It’s good at loading, changing, and cleaning up data across different systems.

Oracle Data Integrator (ODI) Pros:

- E-LT Architecture: Reduces overhead by running transformations inside the target database, making them quicker and less resource-intensive.

- Extensive Integration with Oracle Ecosystem: ODI works with Oracle databases and applications, boosting performance in environments with lots of Oracle products.

- High Flexibility: Supports many kinds of data sources and targets, both structured and unstructured, allowing it to adapt to different needs.

- Data Quality and Governance: Offers strong oversight and tracking features to make sure data stays accurate and intact across workflows.
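
The E-LT pattern mentioned in the list above (load raw data first, then let the target database do the transformation) can be sketched as follows. This is not ODI code: an in-memory SQLite database stands in for the target system, and the table and column names are hypothetical.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for the target warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging_sales (region TEXT, amount_text TEXT)")
conn.execute("CREATE TABLE sales_by_region (region TEXT PRIMARY KEY, total REAL)")

# Extract + Load: raw rows are copied into the staging table untouched.
raw_rows = [("north", "100.50"), ("north", "20"), ("south", "7.25"), ("south", "bad")]
conn.executemany("INSERT INTO staging_sales VALUES (?, ?)", raw_rows)

# Transform: the database engine does the filtering, casting, and aggregation.
conn.execute(
    """
    INSERT INTO sales_by_region (region, total)
    SELECT region, SUM(CAST(amount_text AS REAL))
    FROM staging_sales
    WHERE amount_text GLOB '[0-9]*'
    GROUP BY region
    """
)
totals = dict(conn.execute("SELECT region, total FROM sales_by_region"))
```

The integration layer only moves data and issues SQL, so no separate transformation server is needed. That is the overhead saving the E-LT approach aims for.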

Oracle Data Integrator (ODI) Cons:

- Oracle-Centric: ODI shines in Oracle setups, but its appeal drops in non-Oracle systems, which limits options for organizations that use other platforms.

- High Cost: The license fees are steep, especially once you add support and upkeep costs.

- Technical Know-How Needed: To set up and run ODI, you need to know Oracle tech inside out, which makes it tough to use for teams that aren’t familiar with Oracle.

Best For: Oracle-based companies that need top-notch data integration.

5. Alooma

Alooma is a real-time data integration platform built for companies using Google Cloud; Google acquired it in 2019. It stands out for its data streaming, making it a top pick for businesses that need quick insights from their data pipelines.

Alooma Pros:

- Great for Real-Time Analytics: Alooma streams data in real time. This makes it ideal for companies that need instant insights to run their operations and make decisions.

- Adapts to Business Growth: Alooma keeps up with increasing data needs as companies expand, without slowing down.

- Makes Complex Data Flows Easier: By bringing together tricky data processes into one view, it saves time and boosts productivity.

- Automated Data Movement: Transfers data between different systems automatically, cutting down on manual work.

Alooma Cons:

- Limited to Google Cloud: It works well within Google’s system, but its usefulness drops a lot outside of it.

- Cost: The platform can get expensive as you use more data and stream more often.

- Not Very Flexible: Compared to other tools that offer more options, Alooma focuses on Google Cloud. This makes it less appealing to businesses that use many different platforms.

Best For: Companies that use Google Cloud and need strong real-time analytics and data streaming features.

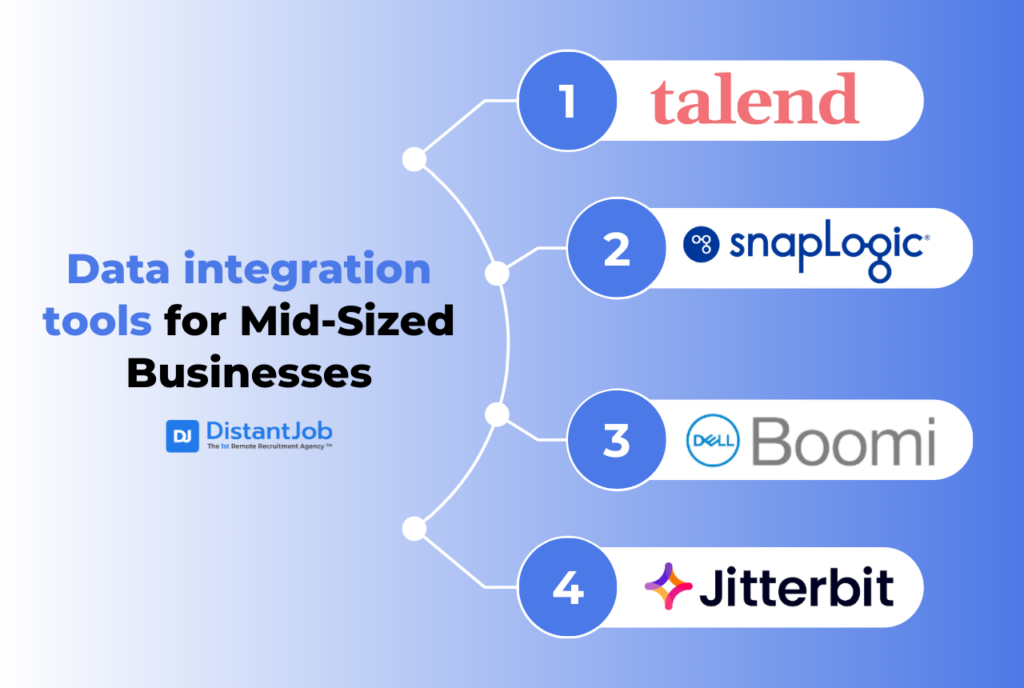

Top Picks for Mid-Sized Businesses

Medium-sized firms often need adaptable solutions that strike a balance between capability and simplicity. These tools offer extensive features while staying scalable and simple to use for growing businesses.

Here are 4 options to think about:

1. Talend

Talend provides a complete data integration platform that centers on ETL (Extract, Transform, Load), data quality, and API services. It works with cloud, on-premises, and hybrid environments, making it useful for different business requirements.

Talend Pros:

- Saves Money: Talend’s open-source model gives mid-sized companies with complex integration needs a budget-friendly option.

- Grows with You: It can handle more data as companies expand.

- Ready-Made Connections: Comes with many connectors to link different systems.

- Works in Cloud and Mixed Settings: Great for businesses switching to cloud or mixed models.

Talend Cons:

- Slows Down with Huge Data: The free version might struggle when dealing with massive datasets.

- Tricky for Advanced Tasks: You still need to code for complex workflows, making it hard for non-technical users.

Best For: Medium-sized companies that need an integration tool that can grow with them and be tailored to their needs without costing a fortune.

2. SnapLogic

SnapLogic offers a cloud-based tool to integrate data and applications. Users can set up automated workflows by dragging and dropping elements, eliminating the need to write code. This makes it a great choice for medium-sized businesses looking for quick, easy-to-use solutions.

SnapLogic Pros:

- User-Friendly Interface: You can create workflows by dragging and dropping elements. This means you don’t need to know a lot about coding.

- Wide Connector Library: You can link up with over 700 cloud apps, databases, and on-site systems.

- Real-Time Data Streaming: You get both real-time and batch processing. This helps businesses to gain up-to-date insights.

SnapLogic Cons:

- Cost Increases with Scale: You’ll pay more as your data grows and gets more complex.

- Limited Advanced Customization: While it’s easy to use, SnapLogic doesn’t offer many options to customize for businesses with complex needs.

Best For: Mid-sized companies that want simple, scalable solutions with minimal coding and real-time integration.

3. Boomi (Dell Boomi)

Boomi is a cloud-native integration platform as a service (iPaaS) that offers powerful automation and data transformation. It aims to help businesses connect on-premises and cloud applications.

Boomi Pros:

- Low-Code Automation: Makes integration easier with little coding, allowing companies to put integrations into action fast.

- Flexible and Scalable: Boomi’s cloud-native structure lets businesses grow as data and integration needs increase.

- Wide Range of Pre-Built Connectors: A large connector library makes it easy to integrate applications.

Boomi Cons:

- Costs Rise with Growth: Boomi’s pricing model gets more expensive as companies need more data.

- Limited Customization: Some tricky workflows might need extra coding beyond what the platform offers.

Best For: Medium-sized businesses that want quick, easy-to-use integration for cloud and local systems.

4. Jitterbit

Jitterbit offers a versatile way to link data, APIs, and apps with a strong but easy-to-use interface. It’s a solid choice for medium-sized companies aiming to streamline workflows and connect different systems.

Jitterbit Pros:

- Quick Integration Setup: Jitterbit provides ready-made templates to get integrations up and running fast.

- Flexible Deployment: Supports integrations both on-premises and in the cloud, making it adaptable for expanding businesses.

- Easy to Use: The interface is straightforward, letting companies set up integrations without needing deep technical know-how.

Jitterbit Cons:

- Limited Customization: As with other low-code platforms, complex scenarios might need custom coding.

- Costs Can Add Up: Expenses can increase as you add more endpoints and data flows.

Best For: Medium-sized businesses looking for an easy-to-use platform for mixed integrations that can keep up with their growth.

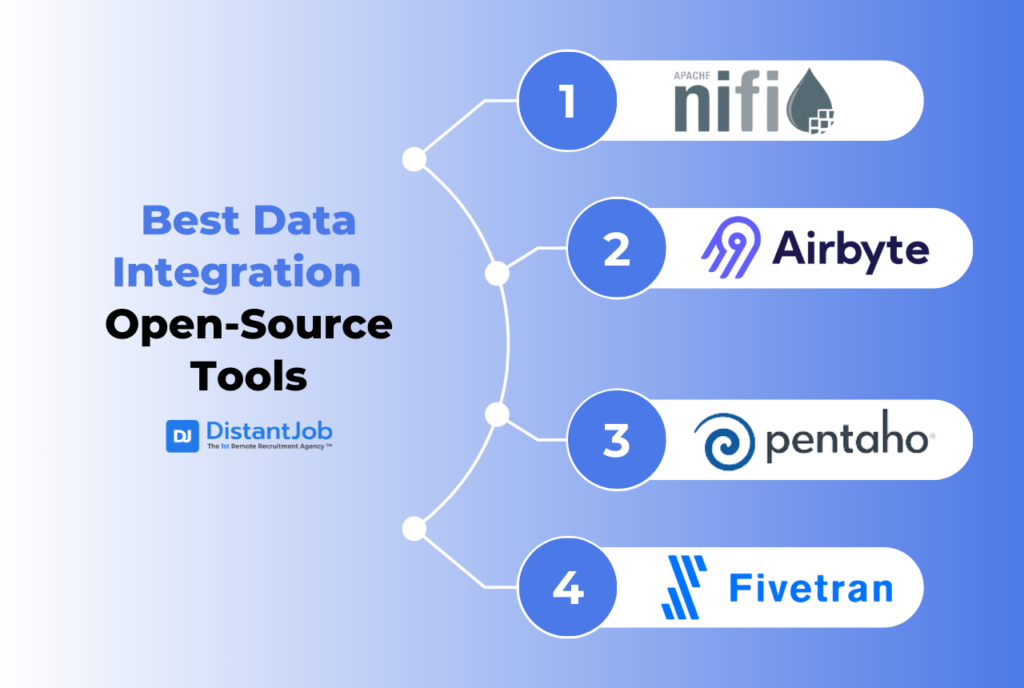

Best Open-Source Tools

Open-source tools give users flexibility, affordable options, and the ability to customize. This makes them a good choice for companies that want solutions they can adapt.

Here are three open-source tools to integrate data, plus one commercial alternative (Fivetran) for comparison:

1. Apache NiFi

Apache NiFi is an open-source platform that automates how data flows between systems. It lets users ingest, transform, and distribute data in real time. This makes it key for organizations that need to move big datasets quickly and reliably.

Apache NiFi Pros:

- Real-Time Data Flow Automation: Makes data streaming happen between different systems right away.

- Scalable: Built to work with huge amounts of data, great for big data setups.

- Flexible Architecture: Can change to fit different needs and work processes.

Apache NiFi Cons:

- Technical Know-How Required: Needs a capable team to set up and keep running.

- Performance Slowdowns: Might slow things down if not set up right for specific uses.

Best For: Companies dealing with big data that want scalable, real-time data integration with strong automation features.

2. Airbyte

Airbyte is an open-source tool to integrate data. It focuses on ETL processes, helping companies build custom data pipelines without much effort. Companies that want to keep control of their data integration processes find it useful because it’s flexible and the community keeps improving it.

Airbyte Pros:

- Integrations You Can Customize: It works with many data sources, so you can adapt it to fit your needs.

- Regular Updates: The active community keeps adding new connectors and making improvements.

- Saves Money: The main platform is free, which makes it great for new and small companies.

Airbyte Cons:

- Lacks Advanced Features: The current version of the platform is still in development, and it doesn’t have some high-end features.

- Still Growing: Airbyte is relatively new, so not all connectors or features are fully mature yet.

Best For: Small to medium-sized businesses looking for an open-source option that allows them to build custom integrations.

3. Pentaho

Pentaho is a powerful open-source tool for data integration known for its ETL abilities. It can handle complex data changes and integrations, which makes it easy to customize for advanced users.

Pentaho Pros:

- Full-scale Data Management: Supports data warehousing, helps with migration, and offers advanced analytics.

- Adaptable: Perfect for companies that need to customize their integration processes.

- Data Visualization: Built-in reporting tools make data interpretation simpler.

Pentaho Cons:

- Tough to Master: Teams without technical skills might struggle to use its wide range of features.

- Demands Resources: Needs a lot of IT infrastructure and skilled technical experts.

Best For: Skilled users who want a flexible solution to handle complex data transformation and integration jobs.

4. Fivetran

Fivetran offers a cloud-based ETL platform that automates data pipeline creation. Unlike the other tools in this section, it is a commercial managed service rather than open-source software. It suits companies that want quick, dependable data integration with little manual input.

Fivetran Pros:

- Automated Pipelines: The system syncs data automatically and needs minimal upkeep.

- Pre-Built Connectors: It supports more than 160 data connectors, making it simple to link with different platforms.

- Quick Setup: It’s easy to implement, allowing teams to spend more time analyzing data instead of integrating it.

Fivetran Cons:

- Costly at Scale: The pricing depends on data volume, which can get expensive as data needs increase.

- Limited Customization: The automation makes it harder to put complex custom workflows into action.

Best For: Businesses looking for quick, straightforward ETL processes that don’t need much tweaking or constant oversight.

Top Picks for Small Businesses and Startups

Small businesses and startups need cheap and simple data integration tools that can grow as their business grows.

Here are three tools that offer flexibility and the ability to scale without being too complex:

1. Tray.io

Tray.io is a low-code platform for automating integrations across different apps. Its easy-to-use interface makes it a good fit for small teams, allowing them to automate workflows without advanced coding skills.

Tray.io Pros:

- Low-Code Automation: Users can build complex workflows with little coding. This suits teams that lack deep IT knowledge.

- Cost-Effective: The pricing plans don’t break the bank, making them a good fit for new companies and small firms.

- User-Friendly Interface: It’s easy to use and set up, so teams can get data pipelines running quickly.

Tray.io Cons:

- Limited Scalability for Complex Processes: While it works well for small tasks, it might have trouble keeping up with more complex workflows.

- Fewer Advanced Features: It doesn’t have the deep functions that businesses with big integration needs require.

Best For: Small companies that want simple, affordable automation tools without needing their own IT staff.

2. Hevo Data

Hevo Data provides a no-code data pipeline platform to integrate data from various sources. Its simple setup makes it a good choice for small and medium-sized companies that want to improve their data operations without much IT help.

Hevo Data Pros:

- Fast Setup: Lets companies start integrating data right away, with a no-code interface that cuts out complex setup steps.

- Can Grow with Small to Medium-Sized Businesses: Hevo can handle more data as a company gets bigger.

- Data Streams in Real Time: Gives companies up-to-date insights, which matters a lot for fast-growing startups.

Hevo Data Cons:

- Higher Costs as Data Grows: When businesses need to integrate more data, Hevo’s prices can become a barrier for some companies.

- Less Flexibility for Power Users: Though easy to use, it might not have the depth needed for more intricate, tailored workflows.

Best For: Smaller and mid-sized companies wanting a simple, code-free platform to combine data from different sources.

3. Celigo

Celigo is an iPaaS (Integration Platform as a Service) solution. It aims to link and automate business processes across various systems. Its ready-made connectors and user-friendly design make it a great choice for small teams with few tech resources.

Celigo Pros:

- Pre-Built Connectors: Offers many connectors, enabling small businesses to link popular apps and systems with ease.

- Intuitive Interface: The user-friendly design helps in quick setup and needs little technical know-how.

- Cost-Effective for Small Teams: Gives flexible pricing options that small businesses and startups can afford.

Celigo Cons:

- Limited Customization for Large-Scale Projects: While it suits smaller businesses well, it might not offer the deep customization that bigger, more complex organizations need.

- Scaling Limitations: As companies expand, they might require a more powerful system to handle larger and more intricate workflows.

Best For: Small companies that want a user-friendly integration tool. It offers enough features to boost efficiency without needing a lot of tech support.

Data Integration Tools Comparison

Picking the right data integration tool depends on several things, like how well it scales, how easy it is to use, and if it works with what you already have.

The table below breaks down the main features of 16 top tools in 2024, so you can see which one fits what your company needs. Whether you run a big corporation or a small startup, it gives decision-makers a quick way to compare options and choose the best data integration tool.

| Tool Name | Best For | Key Features | Ease of Use | Integration Options | Scalability | Customization |
| --- | --- | --- | --- | --- | --- | --- |
| MuleSoft Anypoint | Large companies handling complex API tasks | Full API lifecycle management, Cloud & On-premise support | Requires technical expertise | Cloud, On-premise | High | High |
| Informatica PowerCenter | Big businesses with extensive data workloads | High scalability, strong metadata management | Requires technical expertise | Cloud, On-premise | High | Moderate |
| IBM InfoSphere DataStage | Enterprises with real-time, high-volume needs | Parallel processing, real-time data integration | Requires technical expertise | On-premise | High | Moderate |
| Oracle Data Integrator (ODI) | Oracle-based companies | E-LT architecture, strong data quality governance | Requires technical expertise | Cloud, On-premise | High | High |
| Alooma | Google Cloud users needing real-time analytics | Real-time data streaming, scalability | User-friendly | Cloud | High | Limited |
| Talend | Medium-sized companies with growing data needs | Open-source, ETL, API services | Requires technical expertise | Cloud, Hybrid | Moderate | High |
| SnapLogic | Mid-sized businesses needing simple automation | Real-time & batch processing, large connector library | User-friendly | Cloud | High | Limited |
| Boomi (Dell Boomi) | Medium-sized companies connecting cloud & local apps | Low-code automation, scalability | User-friendly | Cloud, On-premise | High | Moderate |
| Jitterbit | Medium-sized businesses with flexible workflows | Quick integration setup, templates | User-friendly | Cloud, On-premise | Moderate | Limited |
| Apache NiFi | Large companies needing real-time automation | Real-time data flow automation, scalable | Requires technical expertise | On-premise | High | High |
| Airbyte | Small to medium businesses needing custom pipelines | Customizable integrations, open-source | User-friendly | Cloud, On-premise | Moderate | High |
| Pentaho | Skilled users handling complex transformations | Advanced ETL, data warehousing, built-in reporting tools | Requires technical expertise | Cloud, On-premise | Moderate | High |
| Fivetran | Companies seeking automated pipelines | Pre-built connectors, automated sync | User-friendly | Cloud | High | Limited |
| Tray.io | Startups needing low-code automation | Low-code workflows, cost-effective | User-friendly | Cloud | Limited | Limited |
| Hevo Data | Startups and SMEs needing simple integrations | No-code platform, real-time data streaming | User-friendly | Cloud | Moderate | Limited |
| Celigo | Small businesses needing user-friendly iPaaS | Pre-built connectors, cost-effective | User-friendly | Cloud | Limited | Limited |

How to Pick the Right Data Integration Tool for Your Business: What We Suggest

Picking the right data integration tool begins with knowing what your business needs and which key features align with those needs.

Smaller companies often want tools that are cheap and simple to use, while bigger firms should look for tools that can grow with them and handle more complex data.

Connectivity is key—ensure the tool connects to your necessary data sources through ready-made or custom connectors.

Beyond that, data quality and governance are just as vital, including data profiling and tracking where data comes from to keep it intact.
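
Data profiling, mentioned above, usually starts with simple per-column statistics. The sketch below is one minimal way to compute them in plain Python; the `profile` function, the sample rows, and the choice to treat empty strings as missing are all assumptions made for illustration.

```python
def profile(rows, columns):
    """Return the null rate and distinct-value count for each column."""
    report = {}
    total = len(rows)
    for col in columns:
        values = [r.get(col) for r in rows]
        # Assumption: None and empty strings both count as missing.
        missing = sum(1 for v in values if v is None or v == "")
        distinct = len({v for v in values if v is not None and v != ""})
        report[col] = {
            "null_rate": missing / total if total else 0.0,
            "distinct": distinct,
        }
    return report

sample = [
    {"id": 1, "country": "PT", "email": "a@example.com"},
    {"id": 2, "country": "PT", "email": ""},
    {"id": 3, "country": None, "email": "c@example.com"},
    {"id": 4, "country": "CA", "email": "d@example.com"},
]
report = profile(sample, ["id", "country", "email"])
```

A high null rate or an unexpectedly low distinct count in `report` is often the first sign that a source needs cleaning before it is integrated.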

In addition, scalability is essential for future growth, letting your company expand without costly upgrades.

Next, consider how you plan to integrate data—do you need batch processing, real-time streaming, or a mix of cloud and in-house systems? Making sure it works well with your current setup is key.
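
To show the difference between the batch and real-time styles mentioned above, here is a small sketch that computes the same total three ways. The function names and the event shape are hypothetical, and real streaming systems add delivery guarantees and state management that are left out here.

```python
from itertools import islice

def batch_total(events):
    """Batch: wait for the full dataset, then compute once."""
    return sum(e["amount"] for e in events)

def streaming_totals(event_iter):
    """Streaming: update and emit the running total as each event arrives."""
    total = 0
    for event in event_iter:
        total += event["amount"]
        yield total

def micro_batches(event_iter, size):
    """Micro-batch: a common middle ground that groups events into small chunks."""
    it = iter(event_iter)
    while chunk := list(islice(it, size)):
        yield chunk

events = [{"amount": a} for a in (10, 5, 20, 15)]
nightly = batch_total(events)                                  # one answer at the end
live = list(streaming_totals(iter(events)))                    # one answer per event
chunked = [batch_total(c) for c in micro_batches(events, 3)]   # one answer per chunk
```

Batch gives a single result after all data is in, while streaming gives an up-to-date result after every event at the cost of more moving parts. Which one you need depends on how fresh the data has to be.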

It’s also important to factor in maintenance costs, both upfront and over time, to make sure the tool fits your budget in the long run.

For teams of all sizes, it’s important to find a tool with an intuitive and simple-to-use interface. Features like drag-and-drop options, batch processing, and real-time streaming help simplify complex tasks.

Furthermore, tools with data validation ensure everything is accurate and reliable, making the integration process smoother.
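
As a rough idea of what such data validation looks like under the hood, the sketch below checks each incoming record against a few rules and separates valid rows from rejected ones. The rules, field names, and the simplified email pattern are assumptions for illustration, not the behavior of any specific tool.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []
    if not isinstance(record.get("id"), int):
        problems.append("id must be an integer")
    if not EMAIL_RE.match(record.get("email") or ""):
        problems.append("email is malformed")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    return problems

def split_valid(records):
    """Route each record to the valid list or to the rejected list with its issues."""
    valid, rejected = [], []
    for rec in records:
        issues = validate(rec)
        if issues:
            rejected.append((rec, issues))
        else:
            valid.append(rec)
    return valid, rejected

incoming = [
    {"id": 1, "email": "ada@example.com", "amount": 12.5},
    {"id": "2", "email": "not-an-email", "amount": -3},
]
valid, rejected = split_valid(incoming)
```

Keeping the rejected rows together with the reasons they failed, rather than silently dropping them, makes it much easier to fix problems at the source.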

If you have a big team, you might need a tool that works well across different departments.

Finally, before making a full commitment, it’s always smart to test the tool with your own data. This ensures it can handle your needs and integrates well with the systems you have in place.
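
One simple way to run such a test is to compare what went into the tool with what came out. The sketch below assumes you can export rows from both the source and the target as lists of dicts; the `fingerprint` and `reconcile` helpers and the sample data are hypothetical.

```python
import hashlib

def fingerprint(rows, key_fields):
    """Order-independent fingerprint: row count plus a hash of the sorted row keys."""
    keys = sorted("|".join(str(r[f]) for f in key_fields) for r in rows)
    digest = hashlib.sha256("\n".join(keys).encode("utf-8")).hexdigest()
    return len(rows), digest

def reconcile(source_rows, target_rows, key_fields):
    """Compare source and target by row count and content hash."""
    src_count, src_hash = fingerprint(source_rows, key_fields)
    tgt_count, tgt_hash = fingerprint(target_rows, key_fields)
    return {
        "source_count": src_count,
        "target_count": tgt_count,
        "match": src_count == tgt_count and src_hash == tgt_hash,
    }

source = [{"id": 1, "total": 40}, {"id": 2, "total": 15}, {"id": 3, "total": 9}]
loaded_ok = [{"id": 3, "total": 9}, {"id": 1, "total": 40}, {"id": 2, "total": 15}]
loaded_bad = [{"id": 1, "total": 40}, {"id": 2, "total": 51}]

ok_result = reconcile(source, loaded_ok, ["id", "total"])
bad_result = reconcile(source, loaded_bad, ["id", "total"])
```

A mismatch in `bad_result` tells you that rows were dropped or altered during the trial run, which is exactly what you want to learn before committing to a tool.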

Conclusion

In today’s busy business scene, data integration tools play a key role in staying ahead. These tools can change how you work, whether you’re a big company dealing with tricky data tasks or a small firm aiming to smooth out your methods. They boost data accuracy, cut down on manual work, and give quick insights, which helps make better choices and boosts productivity.

As the field grows, more businesses are using AI-powered, no-code options that make data integration easier to handle. These new trends don’t just make things simpler; they also help teams make smart decisions quicker and better.

At DistantJob, we know that picking the right data integration tool is only part of the challenge. To make the most of these tools, you need a capable, committed team to set them up and run them smoothly. This is where we shine. We focus on finding the best remote tech experts who can create solutions that match your company’s specific requirements. Want to take your operations to the next level? Get in touch with us now and find out how we can help you form a team that brings success.