Keras and PyTorch are two powerful deep learning frameworks with one goal: building and deploying AI and machine learning models. They provide essential components like pre-built libraries, optimized computations, and GPU acceleration, eliminating the need to code neural networks from scratch.

These frameworks streamline model design and training, foster collaboration, and accelerate deployment and scalability. Keras and PyTorch were built to enable systems to learn from data, identify patterns, and make intelligent decisions without explicit programming.

PyTorch and Keras are among the most prominent and popular deep learning frameworks. Selecting the appropriate framework is a crucial decision for your AI software, impacting your prototyping capabilities, performance, and time to market.

Here, we will walk through the differences between the two so that, by the end, you will be able to choose the one you need!

What is Keras?

Keras is an open-source, high-level neural network API designed to be user-friendly, modular, and extensible. It does not run the low-level tensor computations itself: it delegates them to a backend such as TensorFlow, and it supports multiple backends and platforms for deep learning models. Its design allows easy experimentation with different model architectures.

In short, Keras allows easy and quick building of AI/ML models.

It was initially developed independently and could run on top of backends like TensorFlow, Theano, or CNTK. Since 2019, it has been the official high-level API of TensorFlow (TensorFlow 2.0+). Keras offers high-level APIs (Sequential and Functional) and built-in support for common layers, optimizers, and loss functions.
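As a minimal sketch of the two high-level APIs mentioned above, the snippet below builds the same small classifier twice, once with Sequential and once with the Functional API, and trains it briefly with a built-in optimizer and loss. The layer sizes and the random training data are assumptions chosen purely for illustration.

```python
import numpy as np
import keras
from keras import layers

# Sequential API: a plain stack of layers.
seq_model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(32, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])

# Functional API: the same network written as a graph of layer calls.
inputs = keras.Input(shape=(20,))
hidden = layers.Dense(32, activation="relu")(inputs)
outputs = layers.Dense(1, activation="sigmoid")(hidden)
func_model = keras.Model(inputs=inputs, outputs=outputs)

# Built-in optimizer, loss, and metric are selected by name.
for model in (seq_model, func_model):
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Random stand-in data (illustrative only).
rng = np.random.default_rng(0)
x_train = rng.random((64, 20), dtype=np.float32)
y_train = rng.integers(0, 2, size=(64, 1)).astype("float32")

history = seq_model.fit(x_train, y_train, epochs=1, batch_size=16, verbose=0)
```

The Functional form becomes useful once a model needs branches, multiple inputs, or multiple outputs, which Sequential cannot express.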

Keras 3

A significant recent development is Keras 3. It is a full rewrite enabling Keras workflows to run on top of multiple backends. They include: JAX, TensorFlow, OpenVINO (only for inference), and, surprisingly enough, PyTorch itself!
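As a rough illustration of how a backend is chosen, Keras 3 reads the `KERAS_BACKEND` environment variable when it is first imported. The helper name `select_keras_backend` below is hypothetical, introduced only for this sketch.

```python
import os

# Backend names accepted by Keras 3 through the KERAS_BACKEND variable.
KERAS_BACKENDS = ("tensorflow", "jax", "torch", "openvino")


def select_keras_backend(name):
    """Hypothetical helper: validate a backend name and export it for Keras."""
    if name not in KERAS_BACKENDS:
        raise ValueError(f"unknown backend {name!r}; expected one of {KERAS_BACKENDS}")
    os.environ["KERAS_BACKEND"] = name
    return name


select_keras_backend("jax")
# `import keras` would go here, after the variable is set. The backend is
# fixed at import time and cannot be switched later in the same process.
```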

This allows you to select or change the backend that best fits your needs or performs best for a specific model. Keras 3 also introduces a backend-agnostic “keras.ops” namespace for developing custom components that work regardless of framework.

This version of Keras also accepts different data pipelines (tf.data.Dataset, PyTorch DataLoader, NumPy, Pandas), no matter which backend is being used. It has a new stateless API for functional-style programming and a distribution API for large-scale data and model parallelism (currently, only for JAX). At the same time, Keras 3 maintains backward compatibility with Keras 2, and many existing “tf.keras” models can run as-is or with minor modifications.

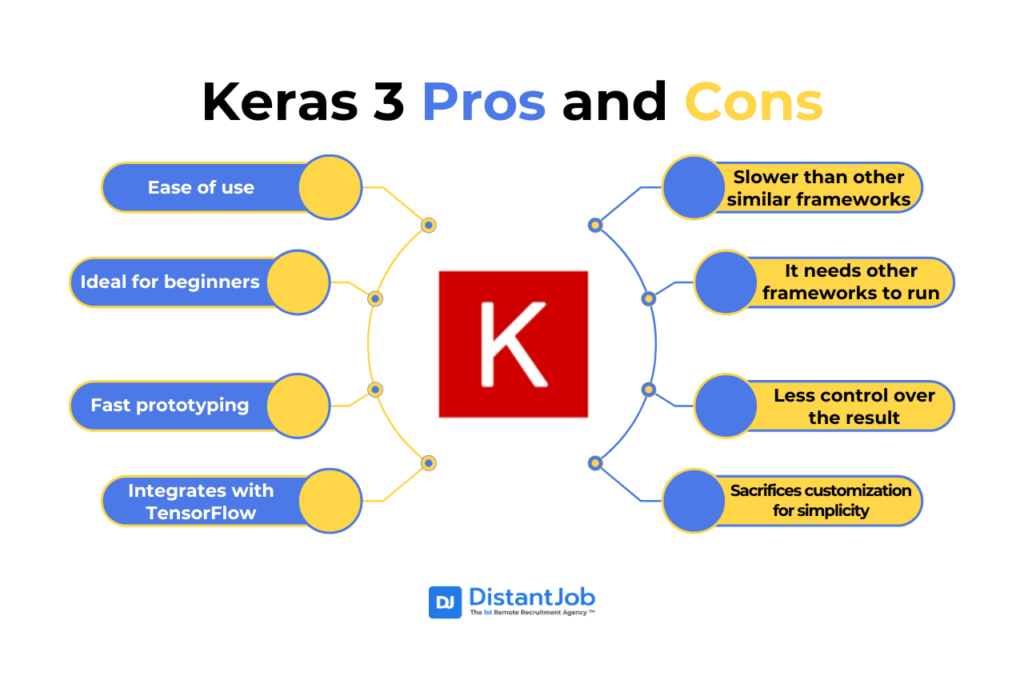

Pros of Keras

Keras is known for being easy to use, making it ideal for beginners and fast prototyping. Its high-level API reduces cognitive load. It also benefits from a large community and extensive documentation, largely inherited from the TensorFlow ecosystem. It integrates well with TensorFlow’s production-ready capabilities when used as “tf.keras”. Keras 3 significantly enhances flexibility and allows leveraging the strengths of different backends. Keras 3 benchmarks show it is consistently faster than Keras 2 across various models.

- Ease of use

- Ideal for beginners

- Fast prototyping

- Integrates with TensorFlow

Cons of Keras

However, due to its high-level abstraction, Keras (particularly before Keras 3) can be slower for small models. Keras hands your code off to the backend, so the extra layer adds overhead that you avoid by using TensorFlow or PyTorch directly. Keras also offers less control over low-level details. Debugging complex models can be more challenging because it relies on the backend’s tools. Deployment robustness likewise depends on the chosen backend’s infrastructure.

- Slower than other similar frameworks

- It needs other frameworks to run

- Less control over the result

- Sacrifices customization for simplicity

When to Use

Keras is best suited for rapid prototyping, educational purposes, and small to medium-scale projects. It is commonly used for standard deep learning tasks like image classification, sentiment analysis, and basic NLP models.

- Prototypes

- Learn how an AI/ML model works

- Small or medium projects

What is PyTorch?

PyTorch is an open-source machine learning library first released in 2016, developed by Meta AI Research Lab. Built on the Torch library, it provides Python and C++ interfaces. PyTorch has dynamic computation graphs (eager execution), which allow for flexibility and real-time modifications to the network architecture. Its design is considered more “Pythonic”, aligning well with standard Python programming conventions.
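The following sketch illustrates what a dynamic computation graph means in practice: the forward pass uses ordinary Python control flow, so the number of layers applied can depend on the input itself. The module `AdaptiveDepthNet` and its stopping rule are hypothetical and chosen only for illustration.

```python
import torch
from torch import nn


class AdaptiveDepthNet(nn.Module):
    """Hypothetical module whose depth is decided at run time from the data."""

    def __init__(self, width=8, max_steps=4):
        super().__init__()
        self.layer = nn.Linear(width, width)
        self.max_steps = max_steps

    def forward(self, x):
        steps = 0
        # Ordinary Python control flow: the graph is rebuilt on every call,
        # so the number of applied layers may differ between inputs.
        while steps < self.max_steps and x.norm().item() > 1.0:
            x = torch.tanh(self.layer(x))
            steps += 1
        return x, steps


torch.manual_seed(0)
net = AdaptiveDepthNet()
small_out, small_steps = net(torch.zeros(1, 8))      # norm is 0: loop is skipped
large_out, large_steps = net(10 * torch.ones(1, 8))  # norm > 1: loop runs at least once
```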

PyTorch excels in flexibility, particularly for research and custom architectures. It has a steeper learning curve because it exposes the dynamic computation graph and lower-level control directly. It has a rapidly growing community, particularly in research circles, and a rich ecosystem of domain-specific libraries like TorchVision and TorchAudio. PyTorch’s production capabilities are improving with tools like TorchServe and TorchScript.

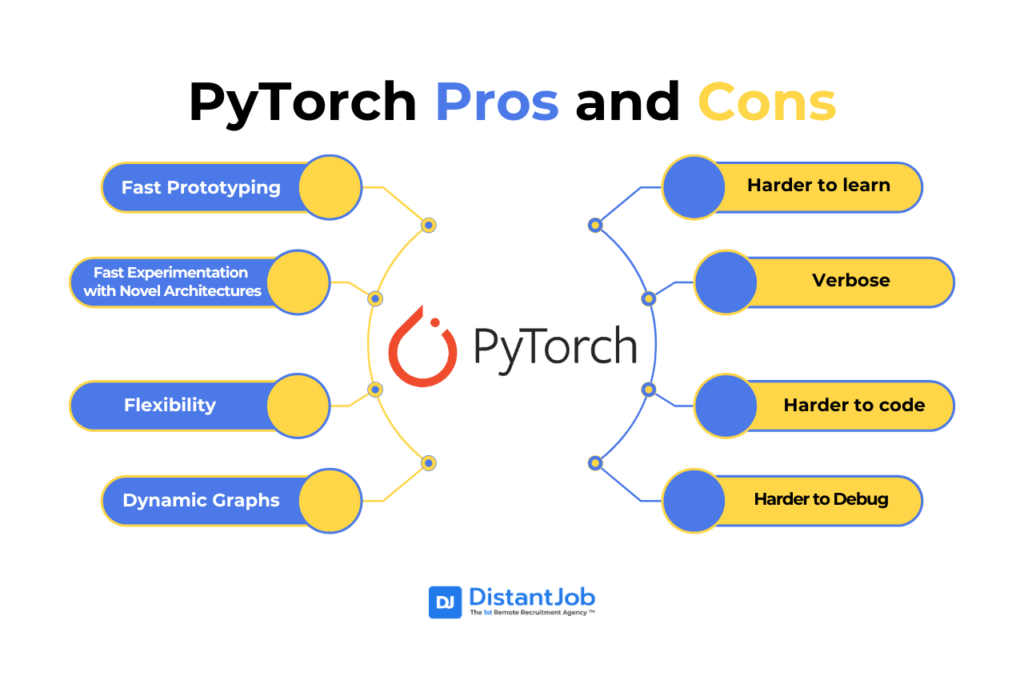

Pros of PyTorch

Highly popular in academic research, PyTorch offers flexibility, dynamic graphs, and ease of use, especially for Python users. It also enables rapid prototyping and experimentation with novel architectures.

Its eager execution simplifies debugging using standard Python tools. PyTorch offers strong GPU acceleration via CUDA.

- Fast Prototyping

- Fast Experimentation with Novel Architectures

- Flexibility

- Dynamic Graphs
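As a small illustration of debugging with standard Python tools under eager execution, the sketch below attaches a forward hook to each layer and records the output shapes as the model runs. The model and the hook name `record_shape` are assumptions made for this example.

```python
import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
shapes = []


def record_shape(module, inputs, output):
    # Runs right after each layer's forward pass. This is plain Python, so
    # print(), assert, or breakpoint() would work here as well.
    shapes.append((module.__class__.__name__, tuple(output.shape)))


handles = [layer.register_forward_hook(record_shape) for layer in model]
model(torch.randn(3, 4))

# Remove the hooks once inspection is done.
for handle in handles:
    handle.remove()
```

After the call, `shapes` holds one entry per layer, which is often enough to locate a shape mismatch.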

Cons of PyTorch

PyTorch is more low-level than Keras and requires more code for basic tasks, which results in a steeper learning curve and more verbose code compared to Keras’ high-level, user-friendly API. PyTorch often requires manual implementation of training loops, loss computation, and optimization steps, whereas Keras abstracts these details. Debugging can also be more demanding with PyTorch: although eager execution lets you use standard Python tools, its lower-level nature means more of the code, and therefore more of the bugs, is yours, whereas Keras hides much of it behind simpler, more declarative models.

- Harder to learn

- Verbose

- Harder to code

- Harder to Debug
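To make the verbosity point concrete, here is a minimal sketch of the manual training loop that PyTorch typically expects you to write, covering roughly what a single `fit()` call does in Keras. The model size, learning rate, and random data are assumptions for illustration only.

```python
import torch
from torch import nn

torch.manual_seed(0)

model = nn.Sequential(nn.Linear(20, 32), nn.ReLU(), nn.Linear(32, 1))
loss_fn = nn.BCEWithLogitsLoss()  # expects raw logits, applies sigmoid internally
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Random stand-in data (illustrative only).
x = torch.rand(64, 20)
y = torch.randint(0, 2, (64, 1)).float()

losses = []
for epoch in range(5):
    optimizer.zero_grad()      # clear gradients from the previous step
    logits = model(x)          # forward pass
    loss = loss_fn(logits, y)  # compute the loss
    loss.backward()            # backpropagate
    optimizer.step()           # update the weights
    losses.append(loss.item())
```

Each step is explicit, which is more code than in Keras but also makes it straightforward to customize any part of the loop.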

Performance and Speed

As you can see in the Keras vs PyTorch graph below, Keras is usually slower for small models, where the overhead of its high-level abstraction is most visible. For large-scale models, it performs much better, thanks to TensorFlow’s optimized backend. Meanwhile, PyTorch tends to be faster for smaller models and research settings. Its dynamic computation graphs can also lead to more efficient memory usage.

However, everything depends on the workload, and your mileage may vary. For many image-classification or CNN tasks, Keras on TensorFlow (especially with Keras 3) will tie or beat PyTorch, whereas for NLP/LLM workloads, PyTorch is typically faster out-of-the-box.

In both frameworks, performance can often be improved via compilation (XLA for TensorFlow and JAX, torch.compile or TorchScript for PyTorch) and hardware-specific options (Tensor Cores, TensorRT). Decision-makers should test their specific models: Keras 3’s multi-backend support means you can easily try TF, JAX, or PyTorch to see which is fastest for your task.
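Testing your own models can be as simple as timing the same step under each setup. Below is a minimal, framework-agnostic timing sketch; the `benchmark` helper and the `dummy_step` workload are hypothetical stand-ins for a real Keras or PyTorch step.

```python
import statistics
import time


def benchmark(fn, warmup=3, repeats=10):
    """Return the median wall-clock seconds of fn() over `repeats` runs."""
    for _ in range(warmup):  # warm-up runs absorb compilation and cache effects
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Stand-in workload; replace with e.g. a Keras predict step or a PyTorch forward pass.
def dummy_step():
    return sum(i * i for i in range(10_000))


median_seconds = benchmark(dummy_step)
```

On a GPU, the timed callable should block until the device has finished its work (for PyTorch, for example, by calling `torch.cuda.synchronize()` inside it); otherwise the numbers mostly measure kernel launch time.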

Ecosystem and Community

Both ecosystems now support the leading models. Keras (via TensorFlow) has invested in unified libraries. Let’s compare Keras vs PyTorch communities.

Keras Ecosystem

Google’s new KerasHub bundles both NLP and CV models in one place. Its KerasCV/KerasNLP components supply state-of-the-art vision and language architectures, and KerasHub makes them easy to access. Notably, popular new models like Meta’s Llama 3, Google’s Gemma, Stable Diffusion, and Segment Anything have Keras implementations. Hugging Face even showcases Llama 3.2 running in Keras via the “keras_hub” package. In practice, many checkpoints from the Hugging Face model hub can be loaded into Keras and run on the chosen backend, provided KerasHub supports the architecture.

TensorFlow (thus Keras) benefits from Google’s backing and a very large user base. It has extensive documentation, tutorials, and industry adoption. By many measures, TensorFlow’s community is larger and more enterprise-oriented. Keras gains this community indirectly, as part of the TensorFlow ecosystem. As one analysis notes, “Keras benefits from TensorFlow’s vast community… its ecosystem includes pre-trained models and integrations… but its reliance on TensorFlow limits its standalone presence.” For Keras users, this means lots of support (TensorBoard, TF-Hub, TFX pipelines) but fewer Keras-specific forums beyond the TF community.

PyTorch Community

PyTorch’s ecosystem is equally rich and very research-focused. Core libraries include TorchVision (CV), TorchText and TorchAudio (NLP and audio), TorchRec (recommendation), and domain projects (e.g., PyTorch Geometric for graphs). The Hugging Face Transformers library (Python) is predominantly PyTorch-based, and nearly all new cutting-edge LLMs and other SOTA models appear first with PyTorch code. Tools like PyTorch Lightning, Accelerate, and DeepSpeed further simplify large-scale training. In short, PyTorch has a rich ecosystem of tools and libraries, especially for research.

PyTorch’s community has been growing explosively in academia and research. Meta’s sponsorship and open governance attract many contributors. Forums like PyTorch Discuss and events such as the PyTorch Conference fuel activity. As of 2025, PyTorch is often cited as the “framework of choice” for researchers and students due to its Pythonic design. Though its industry user count is smaller than TensorFlow’s, it is rising rapidly: companies like Tesla, Meta, OpenAI, and others use PyTorch heavily.

In short, Keras/TF has more enterprise-backed resources and educational reach, while PyTorch has a vibrant, research-centric community.

Deployment and Integration

TensorFlow/Keras remains the “production workhorse” with broad platform support. Its mechanisms for mobile (TFLite), microcontrollers, and browsers are especially advanced. PyTorch has closed much of the gap: it now exports to ONNX, supports mobile through PyTorch Mobile/ExecuTorch, and has serving frameworks (TorchServe, albeit with limited maintenance). The surrounding ecosystem is often the deciding factor. For example, if you need TFLite or TF Serving specifically, Keras is a natural choice, while if you have an existing PyTorch pipeline or use AWS AI services, PyTorch is more convenient.

Deployment on Keras

Keras integrates with TensorFlow Serving for scalable model deployment. Keras’s strength in deployment and production readiness is primarily derived from its deep integration with TensorFlow, particularly since becoming its official high-level API. While Keras is renowned for its simplicity and rapid prototyping capabilities, which accelerate the development process leading to deployment, its robustness for large-scale and complex production environments largely relies on the underlying TensorFlow ecosystem.

TensorFlow has long had the edge in production tooling. It offers mature services like TensorFlow Serving, TFX pipelines (TensorFlow Extended), and comprehensive model monitoring/management. It also supports specialized hardware (TPUs) on Google Cloud. TensorFlow Lite (TFLite) provides highly optimized inference on mobile/edge devices, and TensorFlow.js enables in-browser models. The Keras 3 announcement explicitly notes that any Keras model can be exported as a TensorFlow SavedModel to use “the full range of TensorFlow deployment & production tools (like TF-Serving, TF.js and TFLite)”. In practice, deploying a Keras model to production often means using the TensorFlow stack (perhaps via Docker on Kubernetes or GCP services).
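As a rough sketch of the SavedModel export path described above, the snippet below exports a tiny Keras model and reloads it with TensorFlow for inference. It assumes Keras 3 running on the TensorFlow backend; the model, the temporary directory, and the sample input are illustrative only.

```python
import os
import tempfile

import numpy as np
import keras
import tensorflow as tf

# Tiny stand-in model (illustrative only).
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(2),
])

export_dir = os.path.join(tempfile.mkdtemp(), "exported_model")
model.export(export_dir)  # writes a TensorFlow SavedModel with a `serve` endpoint

# Reload the artifact without Keras, as a serving system would.
reloaded = tf.saved_model.load(export_dir)
sample = np.ones((1, 4), dtype="float32")
served = reloaded.serve(tf.constant(sample)).numpy()
expected = model.predict(sample, verbose=0)
```

The exported directory is the artifact that tools such as TF Serving consume; the reloaded object only exposes inference, not training.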

Deployment on PyTorch

PyTorch’s production story has improved, but is more fragmented. TorchScript lets you compile a PyTorch model into an optimized form, and TorchServe provides a container-based model server. However, TorchServe’s official documentation now warns it’s “no longer actively maintained”. That said, many enterprises still use it (AWS SageMaker has built-in support for TorchServe endpoints).
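For comparison, here is a minimal sketch of the TorchScript route: a model is traced with an example input, saved to disk, and reloaded without needing the original Python class. The model and file path are assumptions for illustration.

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2)).eval()
example = torch.randn(1, 4)

# Tracing records the operations executed for the example input.
traced = torch.jit.trace(model, example)

path = os.path.join(tempfile.mkdtemp(), "model.pt")
traced.save(path)
reloaded = torch.jit.load(path)

# The reloaded artifact should reproduce the original model's output.
with torch.no_grad():
    same_output = torch.allclose(model(example), reloaded(example))
```

Tracing only captures the path taken for the example input, so models with data-dependent control flow generally need `torch.jit.script` or another export route instead.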

PyTorch also offers PyTorch Mobile and PyTorch Edge runtimes for iOS/Android/embedded. In 2024, Meta introduced ExecuTorch, a new PyTorch runtime optimized for a wide range of mobile/edge hardware. ExecuTorch (part of PyTorch Edge) aims to run vision, speech, and generative models on-device with low latency, drawing on partnerships with Arm, Apple, Qualcomm, and others. Importantly, Google announced AI Edge Torch in 2024: a tool to run PyTorch models using the TensorFlow Lite runtime. This means PyTorch models can now leverage the TFLite ecosystem for mobile deployment. Finally, ONNX continues to be a bridge: both TensorFlow and PyTorch models can convert to ONNX for cross-platform deployment on runtimes like ONNX Runtime or NVIDIA TensorRT.

Deployment on Cloud

Both frameworks run on all major clouds. TensorFlow has native TPU support on Google Cloud and optimized Docker images. PyTorch is first-class on AWS (SageMaker, EC2 DL AMIs) and Azure (PyTorch on Azure ML). All clouds support GPU/CPU for both.

In practice, TensorFlow’s tooling (TFX, TensorBoard, TFLite) is often easier for large-scale production pipelines, whereas PyTorch leverages cloud services via containers or TorchServe. (For example, AWS has many PyTorch examples on SageMaker, and Google Cloud announced support for PyTorch on TPUv5.)

Keras vs PyTorch Comparative Summary

The table below compares key aspects of Keras vs PyTorch:

| Aspect | Keras (TensorFlow) | PyTorch |
| --- | --- | --- |
| Primary model style | Declarative/static graph (tf.keras) | Imperative/dynamic graph |
| Ease of use | Very user-friendly, high-level API | Pythonic, more code, but flexible |
| Performance | High (especially CNNs/TPU) | High (especially RNNs/Transformers) |
| Large models (LLMs) | Supported via KerasNLP/KerasHub (Gemma, Llama, etc.) | Widely used (Hugging Face + PyTorch) |
| Ecosystem | Full stack (TF Hub, TFX, TensorBoard, TFLite, TF.js) | Rich research libraries (TorchVision, Transformers, Lightning) |
| Community | Massive (Google-backed, enterprise) | Rapidly growing (research & academia) |
| Deployment | TF Serving, TFLite, K8s/TensorFlow Extended | TorchScript, ONNX, PyTorch Mobile, (ExecuTorch) |
| Multi-backend support | Native only TF (but Keras 3 adds JAX/PyTorch) | Core is PyTorch (now also interoperable via TorchDynamo/TorchScript) |
| Typical use cases | Rapid prototyping, production/mobile apps, CV/NLP pipelines | Research/R&D, custom architectures, LLM/RL experiments |

Recommendations

Based on the comparison above, here is what I would suggest for each scenario:

Rapid Prototyping & Ease-of-Use: Keras (TensorFlow)

Its high-level API and extensive tutorials accelerate development. Teams new to DL or focused on standard tasks (image classification, basic NLP, sentiment analysis, etc.) can iterate quickly with Keras. Keras 3’s improvements make it both easy and fast.

Large-Scale Production & Mobile/Edge: TensorFlow/Keras

If you need robust deployment (TF Serving, TFLite, TFX) or intend to leverage Google’s cloud/TPUs/mobile optimizations, Keras/TF is a strong choice. It excels at scaling to large data and serving models globally. For on-device AI, TensorFlow Lite is more mature (though PyTorch’s ExecuTorch and AI Edge Torch are catching up).

Cutting-Edge Research & Custom Models: PyTorch

Its dynamic graph and native support for advanced features (mixed precision, distributed training, custom loss functions) make it ideal for R&D, experimental architectures, and LLM work. Hugging Face Transformers and PyTorch Lightning integrate deeply here. For research teams pushing new ideas (new neural net types, RL, generative models, etc.), PyTorch’s flexibility is a boon.

Mixed or Cross-Platform Needs: Keras 3 (multi-backend)

If you want one codebase to target both ecosystems, Keras 3 is unique. You can train with JAX for TPU performance, serve with TF Serving, or export to a PyTorch module for use with PyTorch tools. This “write once, run anywhere” approach can be valuable for open-source model releases.

NLP/LLMs

As of 2025, PyTorch still dominates the LLM landscape (ChatGPT, Llama, etc. were built on it). However, Keras now supports those models too. If existing Hugging Face/PyTorch pipelines are used, continuing with PyTorch is simplest. If you prefer TensorFlow or want unified tooling, KerasNLP and TFX can handle large language models (for instance, Gemma fine-tuning via TFX is demonstrated by Google).

In all cases, measure performance on your workloads. These frameworks continue to evolve: update to the latest versions (Keras 3.x, PyTorch 2.x) to take advantage of compiler improvements.

Conclusion

Ultimately, many teams find that both frameworks can coexist: use Keras/TensorFlow for production pipelines and PyTorch for R&D, or leverage Keras 3 to bridge the gap. The best choice between Keras vs PyTorch is the one that aligns with your team’s skills, the project’s requirements, and the surrounding infrastructure.

Just in case you don’t know which framework suits you best, we’re here to help you make that decision. Our team can guide you through the technical considerations and connect you with experienced remote developers who are skilled in either Keras or PyTorch. Whether you need someone for a short-term project or a long-term remote position, we can match you with the right talent to bring your vision to life.