You’re probably familiar with the ongoing debate about code quality metrics in software engineering, about their effectiveness, interpretation, and impact on your development practices. While high-quality code matters, you might wonder what metrics you should use and how to apply them.

Some studies suggest a strong correlation between certain code metrics and positive outcomes like maintainability, while others find conflicting or inconclusive results. Two studies, one from a partnership between two Brazilian Universities and another published in the Empirical Software Engineering journal, have pointed out this contradiction.

This leads to questions about the validity and reliability of those metrics. So, what are code quality metrics about? Are they useful? And how do you ensure code quality?

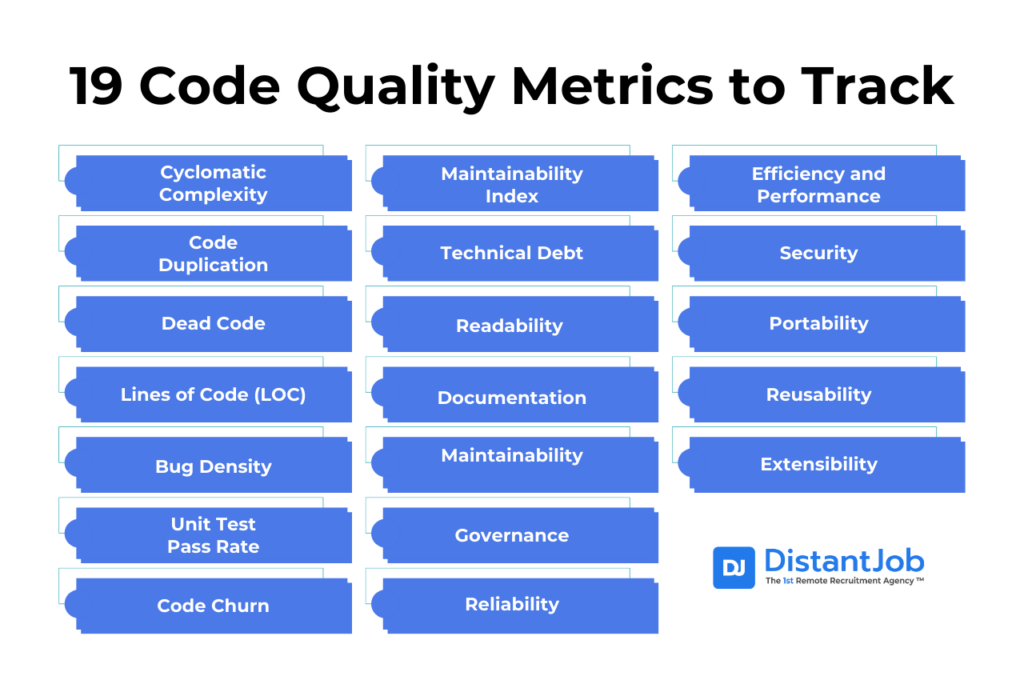

19 Code Quality Metrics to Track

Code quality metrics measure how well code is written and structured, encompassing aspects such as readability, maintainability, efficiency, reliability, and security.

Development teams measure code quality by combining both quantitative and qualitative code quality metrics. Quantitative data helps track progress over time, identify risks, and set objective benchmarks. Qualitative assessments, often through manual code reviews, help uncover complex issues, ensure adherence to coding standards, and facilitate knowledge sharing.

Quantitative Metrics

Quantitative metrics estimate the risk of poor quality in a given piece of code. They are numerical measurements that tools can calculate automatically, focusing on the “what” and “how many” aspects of code. You’ll find them useful for tracking trends over time, setting performance goals for your team, and identifying specific areas that need your attention.

1. Cyclomatic Complexity

Cyclomatic complexity measures how much the logic branches by counting the number of linearly independent paths through the code. It focuses on the number of decision points (like if statements, for loops, while loops, or case statements in a switch) in a program.

The higher this metric, the more complex your code becomes. You should aim for lower cyclomatic complexity, with many experts suggesting you keep it around 10 or below as a threshold. When you achieve lower complexity, your code becomes more readable, easier for you to modify, and arguably less prone to bugs.
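
To make the counting concrete, here is a minimal sketch that approximates cyclomatic complexity as “decision points + 1” using Python’s standard `ast` module. The choice of which node types count as decision points is an assumption of this sketch; dedicated tools cover more constructs and may report slightly different numbers.

```python
import ast

# Node types treated as decision points in this simplified count.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as decision points + 1."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of `and` / `or` adds one more branch.
            decisions += len(node.values) - 1
    return decisions + 1


# Hypothetical function used only to exercise the counter.
SAMPLE = '''
def shipping_cost(weight, express):
    if weight <= 0:
        raise ValueError("weight must be positive")
    cost = 5
    for step in range(int(weight)):
        if step > 10 and express:
            cost += 2
        else:
            cost += 1
    return cost
'''

print(cyclomatic_complexity(SAMPLE))
```

The sample has two `if` statements, one `for` loop, and one `and`, so the sketch reports four decision points plus one.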

When to use the Cyclomatic Complexity metric: When your code must comply with international safety standards like ISO 26262, which calls for low code complexity.

The risk of the Cyclomatic Complexity metric: To improve the metric, one might divide the code into many smaller functions. However, if the division isn’t done logically and coherently, the result is code that is even harder to read, since its logic is scattered across many functions. The metric improved, but the readability worsened.

2. Code Duplication

Code Duplication tracks instances of identical or nearly identical code. Reducing duplication leads to easier maintenance, less risk of inconsistent changes, and more modular, reusable code, which in turn reduces bugs.

When to use the Code Duplication metric: Duplications increase the chance of errors and bugs. They also make the code harder to maintain. The ideal is to keep duplication to a minimum, though not necessarily at zero.

The risk of the Code Duplication metric: Let’s say a program has two functions: one to calculate product_discount and another to calculate service_discount. To reduce the code duplication metric, a developer unifies both in a single generic method. A few months later, the business logic changes, and the discounts on products and services no longer follow the same rule. Now the generic method must be modified once again, which risks breaking the business logic for both callers. The code was considered “cleaner” according to the Code Duplication metric; however, the coupling increased, making the code more vulnerable to maintenance issues.
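
The scenario can be sketched as follows. The discount rates and the `generic_discount` names are hypothetical, chosen only to illustrate how a merge that looked harmless ends up coupling two business rules.

```python
# Before: two functions that happen to look identical today.
def product_discount(price: float) -> float:
    return price * 0.90  # hypothetical 10% rate for products


def service_discount(price: float) -> float:
    return price * 0.90  # same rate, but owned by a different business rule


# After "deduplication": one generic helper serves both callers.
def generic_discount(price: float) -> float:
    return price * 0.90


# Months later the service rule changes. The shared helper now needs a flag,
# and every caller has to be re-checked to make sure it passes the right kind.
def generic_discount_v2(price: float, kind: str = "product") -> float:
    rate = 0.85 if kind == "service" else 0.90
    return price * rate


print(generic_discount_v2(200.0))             # product path
print(generic_discount_v2(200.0, "service"))  # service path
```

Had the two original functions stayed separate, the rule change would have touched only `service_discount`.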

3. Dead Code

The Dead Code metric refers to code portions no longer in use or relevant. Its removal improves code quality, reduces maintenance time, and enhances readability, as dead code increases codebase size, hinders navigation, and can harbor outdated logic or vulnerabilities. The ideal dead code rate is 0%.

When to use the Dead Code metric: Code that is not being executed is dead weight. Always clean up Dead Code.

The risk of the Dead Code metric: A verification tool might mistakenly flag a vital part of the application as dead code, leading your team to erase it. This can happen, for example, when a feature flag guards that snippet and the flag is currently switched off.

4. Lines of Code (LOC)

A smaller codebase is usually less complex and more concise. Lines of Code is a straightforward metric that counts the total lines in a project and is used to gauge size. However, it doesn’t directly reflect quality. While a higher LOC might indicate complexity, it’s best used with other metrics for a fuller picture.

When to use the Lines of Code metric: Fewer lines of code often indicate simpler, more concise solutions.

The risk of the Lines of Code metric: To lower the count, a developer might chain several method calls and ternary operators in a single line of code, or compress multiple instructions into a single statement. The LOC metric improves, but the code becomes hard to read, understand, or debug for both the team and the original programmer. The code wasn’t simplified; it became dense and unreadable.
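
A small sketch of that trade-off, using hypothetical order data: both versions produce the same labels, but the one-line version wins on LOC while losing on readability.

```python
# Hypothetical sample data for the illustration.
orders = [
    {"total": 120.0, "vip": True},
    {"total": 40.0, "vip": False},
    {"total": 0.0, "vip": False},
]

# Dense: one statement, fewer lines, harder to read and to step through.
labels_dense = ["free" if o["total"] == 0 else "discount" if o["vip"] or o["total"] > 100 else "full" for o in orders]


def price_label(order: dict) -> str:
    """Same logic as the dense version, spread over named steps."""
    if order["total"] == 0:
        return "free"
    if order["vip"] or order["total"] > 100:
        return "discount"
    return "full"


labels_readable = [price_label(o) for o in orders]

# Both versions agree; only the line count differs.
assert labels_dense == labels_readable
print(labels_readable)
```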

5. Bug Density

Bug Density measures the number of bugs per unit of code size (usually per 1,000 lines). High bug density suggests quality or stability issues, helping prioritize sections for refactoring or testing.
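
As a quick sketch of the arithmetic, the function below divides a bug count by the size in thousands of lines. The module names and figures are invented for the example.

```python
def bug_density(bug_count: int, lines_of_code: int) -> float:
    """Return bugs per 1,000 lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return bug_count / (lines_of_code / 1000)


# Hypothetical modules: (known bugs, lines of code).
modules = {
    "checkout": (12, 8000),
    "search": (3, 500),
}

# Rank modules from highest to lowest density.
ranked = sorted(modules, key=lambda name: bug_density(*modules[name]), reverse=True)

for name in ranked:
    print(name, bug_density(*modules[name]))
```

Here the smaller module has fewer bugs in absolute terms but a higher density, which is the kind of signal the metric is meant to surface.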

When to use the Bug Density metric: Whenever you wish to deploy code.

The risk of the Bug Density metric: Fewer bugs do not always translate into safe code. One codebase might have many bugs in the user interface that are annoying but otherwise harmless. Another might have fewer bugs, but one of them is a critical security flaw that compromises end-user data.

6. Unit Test Pass Rate

Unit Test Pass Rate measures the percentage of unit tests that pass in a test suite, indicating code stability and reliability. A high pass rate suggests stable code, while lower rates highlight bugs or instability in recent changes. A commonly cited goal is 80%, though that figure is more often quoted for test coverage; on a main branch, teams generally expect every test to pass.

When to use Unit Test Pass Rate: When testing key algorithms for which there is a clear “third-party” success criterion and demonstrable business value. You also use it as a debugging tool and as an assertion of the correct behavior of events. Unit tests can provide good feedback to developers during development.

The risk of Unit Test Pass Rate: A developer, under pressure, might write tests that simply execute lines of code but do no actual verification that the result is correct. The rate rises, but confidence in the code remains low, as the tests don’t detect failures. Plus, there is the risk of over-engineering tests for low-risk snippets, or for chunks of code that lack business value.
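
The sketch below illustrates the first risk with a deliberately buggy function and two hand-rolled checks. The function, the check names, and the tiny `pass_rate` runner are all hypothetical; a real project would use a test framework instead.

```python
def apply_tax(amount: float, rate: float) -> float:
    """Deliberately buggy: subtracts the tax instead of adding it."""
    return amount - amount * rate


def hollow_check() -> None:
    # Executes the code but verifies nothing, so it can never fail.
    apply_tax(100.0, 0.2)


def meaningful_check() -> None:
    # Verifies the result, so it exposes the bug above.
    assert abs(apply_tax(100.0, 0.2) - 120.0) < 1e-9


def pass_rate(checks) -> float:
    """Percentage of checks that run without an AssertionError."""
    passed = 0
    for check in checks:
        try:
            check()
            passed += 1
        except AssertionError:
            pass
    return 100 * passed / len(checks)


print(pass_rate([hollow_check]))                    # looks perfect, proves nothing
print(pass_rate([hollow_check, meaningful_check]))  # lower, but honest
```

The suite with only the hollow check reports a perfect pass rate even though the function is wrong.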

7. Code Churn

The Code Churn metric measures additions, deletions, and modifications to code over time, indicating codebase stability or volatility. High churn can imply poor design or unclear requirements.
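
One rough way to estimate churn per file is to sum the added and deleted line counts that `git log --numstat` prints for each commit. The sketch below parses that tab-separated output from a string; the sample text is invented, and counting “added + deleted” as churn is an assumption of this example rather than a fixed definition.

```python
from collections import Counter


def churn_by_file(numstat_output: str) -> Counter:
    """Sum added + deleted lines per path from `git log --numstat` text."""
    churn = Counter()
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank lines and commit header lines
        added, deleted, path = parts
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        churn[path] += int(added) + int(deleted)
    return churn


# Invented sample resembling numstat lines from two commits.
SAMPLE = (
    "10\t2\tsrc/cart.py\n"
    "3\t1\tsrc/auth.py\n"
    "\n"
    "25\t20\tsrc/cart.py\n"
    "-\t-\tassets/logo.png\n"
)

for path, lines_changed in churn_by_file(SAMPLE).most_common():
    print(path, lines_changed)
```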

When to use the Code Churn metric: High Code Churn might indicate code instability, signaling potential problems with the architecture or that the team doesn’t understand the functionality.

The risk of the Code Churn metric: Sometimes, code needs an overall rewrite. While smaller pull requests are advisable, when you re-engineer code to make it more scalable and functional, the code is actually becoming better, while the code churn metric rises dramatically.

8. Maintainability Index

The maintainability index assesses how easy code is to maintain on a scale of 0-100, considering factors like Halstead volume, LOC, and cyclomatic complexity. A higher value indicates better maintainability; above 85 is a commonly cited mark, though cutoffs vary between tools.
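
One commonly published formulation combines Halstead volume, cyclomatic complexity, and lines of code, then rescales the result to 0-100. The sketch below assumes that variant; coefficients and rescaling differ between tools, so treat the numbers as illustrative only.

```python
import math


def maintainability_index(
    halstead_volume: float,
    cyclomatic_complexity: float,
    lines_of_code: float,
) -> float:
    """Maintainability index rescaled to 0-100 (one common variant)."""
    raw = (
        171
        - 5.2 * math.log(halstead_volume)
        - 0.23 * cyclomatic_complexity
        - 16.2 * math.log(lines_of_code)
    )
    # Clamp at zero and rescale from the original 0-171 range.
    return max(0.0, raw * 100 / 171)


# Hypothetical inputs: same size and volume, different branching.
simple = maintainability_index(1000, 5, 50)
tangled = maintainability_index(1000, 25, 50)
print(round(simple, 1), round(tangled, 1))
```

With everything else equal, the version with more branching scores lower, which matches the intent of the metric.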

When to use the maintainability index: It’s beneficial to use the maintainability index during code reviews, refactoring, and to track changes in code quality over time. It helps identify areas that may be difficult to maintain, understand, or modify due to complexity or other factors.

The risk of the maintainability index: Refactoring a snippet of code that is stable and working well, just because the maintainability index scored it poorly, might be a waste of time. As a rule of thumb, don’t fix something that isn’t broken when doing so generates no value.

9. Technical Debt

Technical debt is an estimate of the time and costs to fix all quality problems in a code base. Unmanaged technical debt slows development, increases bug risk, and reduces scalability. It is closely related to software entropy and software rot. Unsurprisingly, high technical debt is bad.

When to use the technical debt metric: Accumulated technical debt can harm development speed, so it must be addressed to prevent damage to the development of new features.

The risk of the technical debt metric: The metric doesn’t differentiate between “strategic debt” and “negligible debt”. In other words, if the technical debt is concentrated in areas that don’t harm the business or the product, addressing it is a waste of time and work. Focusing exclusively on technical debt can paralyze development and stop a company from reacting to the market.

Qualitative Metrics

Qualitative metrics involve more subjective assessments, but they describe the qualities that quantitative metrics try to approximate. They capture design intent and real-world readability, although they can be biased or inconsistent without clear guidelines. Qualitative metrics focus on the “why” and “how” of code, often requiring human judgment, experience, and review. These metrics provide a more nuanced understanding of code quality.

10. Readability

Code shouldn’t be hard to read at all. Readability measures how easily a developer can understand the code. It encompasses clear naming conventions, proper indentation, and comprehensive documentation. High readability simplifies maintenance and debugging.

How to evaluate readability: Code review is the most important way to evaluate it. A human reviewer is the best judge of code clarity: whether the variable and function names are self-descriptive and whether the logic is easy to follow.

Quantitative metrics support: Cyclomatic Complexity and Code Duplication strongly indicate whether the code is readable or not.

11. Documentation

Documentation ensures retention of system knowledge. Good documentation is appraised by its existence, clarity, and usefulness.

How to evaluate documentation: A team can evaluate documentation through manual review. The documentation might be in the code, a wiki, or a README. Proper documentation must be complete, up-to-date, and understandable. Linting tools can verify documentation in functions or public classes.

Quantitative metrics support: There is no direct metric for “good documentation”. However, Unit Tests can be employed to ensure the code follows the documentation.
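
In Python, one way to keep code and documentation in step is the standard `doctest` module, which runs the examples embedded in a docstring as tests. The sketch below uses a hypothetical `slugify` function and drives the parser and runner directly so that it is self-contained; in a real module, `doctest.testmod()` or `python -m doctest` is the more usual entry point.

```python
import doctest


def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated slug.

    >>> slugify("Code Quality Metrics")
    'code-quality-metrics'
    >>> slugify("  Extra   spaces  ")
    'extra-spaces'
    """
    return "-".join(title.lower().split())


# Extract the examples from the docstring and run them as tests.
parser = doctest.DocTestParser()
test = parser.get_doctest(slugify.__doc__, {"slugify": slugify}, "slugify", None, 0)
runner = doctest.DocTestRunner(verbose=False)
results = runner.run(test)

print(f"attempted={results.attempted} failed={results.failed}")
```

If the implementation drifts away from what the docstring promises, the failed count becomes non-zero.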

12. Maintainability

Maintainability is crucial to the long-term success of any software project, not only for fixing bugs but also for adding new functionality. You achieve it by meeting all the other requirements of clean code; maintainability is the final result.

How to evaluate maintainability: Take note of the time and effort needed to correct bugs, add new functions, or integrate with other systems. If the team takes days to understand the code for just a small alteration, the degree of maintainability is low.

Quantitative metrics support: The Maintainability Index tries to quantify maintainability through lines of code and complexity measures. However, it’s an indicator that informs a decision, not a verdict. You can also investigate Code Churn in areas of the code that are supposed to be stable.

13. Governance

Governance encompasses all quality standards, processes, and policies followed by the team.

How to evaluate governance: Check your code review policies and compare them with the code in production. New code should only merge with the main branch after passing all tests and static analysis.

Quantitative metrics support: Instead of using a metric, use a linter tool.

14. Reliability

Reliability is the probability that software will work as intended under normal operation conditions. Reliable code minimizes crashes and unexpected behavior, fostering user trust.

How to evaluate reliability: Monitoring and alerting tools measure failure rates, latency, and uptime. Error logs provide further insight.

Quantitative metrics support: Test coverage is a strong predictor of reliability. A high test coverage (with quality tests) means the code was extensively verified. Bug density also monitors reliability. Again, interpret these metrics with caution.

15. Efficiency and Performance

Efficiency and Performance are closely linked. They measure how fast the software performs its tasks and the amount of resources it consumes. Unlike other qualities, they are directly measurable. Efficient code enhances user experience by ensuring smooth, quick application responses.

How to evaluate efficiency and performance: They are evaluated through performance tests and monitoring in real time.

Quantitative metrics support: The usual ones for any software application: latency, throughput, and CPU, memory, and disk usage.
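
As a minimal sketch of how latency and throughput can be sampled for a single function, the helper below times repeated calls with `time.perf_counter`. The `workload` function and the number of runs are placeholders; real performance tests would use representative inputs and a dedicated benchmarking or monitoring tool.

```python
import statistics
import time


def measure(func, runs: int = 200) -> dict:
    """Time repeated calls and report simple latency and throughput figures."""
    latencies = []
    start = time.perf_counter()
    for _ in range(runs):
        t0 = time.perf_counter()
        func()
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
        "throughput_per_s": runs / elapsed,
    }


def workload() -> int:
    # Placeholder task standing in for the code under test.
    return sum(i * i for i in range(10_000))


stats = measure(workload)
for name, value in stats.items():
    print(f"{name}: {value:.6f}")
```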

16. Security

Security is the resistance of the code against malicious cyber threats. It can’t be measured only by metrics; it needs to be supported by good security practices.

How to evaluate security: Among the many practices to evaluate security in software, we can highlight vulnerability analysis and penetration tests.

Quantitative metrics support: Metrics that can be used for security include the number of findings from SAST (static analysis that searches for vulnerability patterns in code, like SQL injection and cross-site scripting) and from DAST (which searches for vulnerabilities during execution), security test coverage, and security patch rate (the frequency and regularity with which security updates are applied to systems within a company).

17. Portability

Portability is software’s capacity to function in different environments (PC, mobile, cloud, etc.) with minimal modification. Portable code avoids platform-specific dependencies. When that’s not possible, you need a decoupled abstraction layer that splits the code in two: a part dedicated to platform-specific concerns and the main part of the application.

How to evaluate portability: Run the software in different environments.

Quantitative metrics support: The same as efficiency and performance. You can use tools like Docker to test different environments without different devices.

18. Reusability

Reusability measures how readily a piece of code can be used multiple times, in the same code base or in other projects, without being rewritten from scratch, reducing duplication and speeding up development.

How to evaluate reusability: Check for independent modules or libraries that are used by other projects or by other parts of the same code base. Reusable code often lives in custom libraries for the project. Code that has few dependencies of its own and does its job well is reusable.

Quantitative metrics support: Code duplication might point out opportunities to generate reusable code.

19. Extensibility

Extensibility is the capability of code to receive new features without modification to the existing code. It allows adaptation to changing needs.

How to evaluate extensibility: You get a feel for this quality by working on a project over time. An extensible system allows new features to be added with minimal effort, since the code anticipates extension points.

Quantitative metrics support: No code quality metric measures extensibility directly. However, you can approximate it with metrics such as “Time to Provision” (development metric) and “Time to Deploy” (deployment metric). They reflect code extensibility by measuring the time from initiating the resource creation process to its fully functional state. You can also check whether the code uses design patterns, which tend to make adding new features easier.

Tools for Measurement

You have access to various tools that can help you measure both quantitative and qualitative code quality metrics effectively. Traditional Static Analysis Tools like SonarQube, ESLint, and Pylint examine code without running it to identify errors, enforce coding standards, and assess overall quality.

If you’re looking for more advanced capabilities, modern AI code review platforms like CodeAnt.ai can provide you with real-time feedback, vulnerability analysis, and auto-fix suggestions. These tools streamline your review process and help you maintain consistency across your codebase.

You can also leverage other specialized tools to get a complete picture of your code quality: IDE plugins, code coverage tools (e.g., JaCoCo, Istanbul), performance monitoring tools, and version control system integrations. These tools centralize your data and provide you with dashboards that help you track and interpret metrics effectively, enabling your team to continuously improve your code quality over time.

Criticism of Code Quality Metrics

As you can see, code quality metrics can help us make code more readable, but we can’t rely on a single metric. The problem is not the metrics themselves, but how they are used.

To avoid bad code quality due to code quality metrics, we must integrate them into a broader culture of code quality. The code quality metrics are not KPIs, and they do not end the discussion, but start it.

Instead of looking at a high cyclomatic complexity score and saying “this complexity is too high, please fix it”, a better response would be “this signals a high level of complexity in the code; let’s review it together”.

Always combine code quality metrics with code review. Human reviewers are best placed to evaluate solutions and check their readability, maintainability, and elegance.

How to Actually Write Quality Code?

There are several good practices for writing quality code. The most important, arguably, is remembering that other programmers will read your code. Keep in mind, too, that even good practices might not apply to every case.

DRY

DRY means “Don’t Repeat Yourself”. It’s a practice that cautions against code duplication. Duplicated code tends to drift apart and end up contradicting itself, and software that contradicts itself will malfunction.

YAGNI

YAGNI means “You Ain’t Gonna Need It”. Don’t add functionality for a hypothetical future. The resulting dead code won’t be worth it. Code for today’s needs.

KISS

KISS means “Keep it simple, stupid”. Instead of writing complex solutions that only you understand, prefer simple ones. Keep in mind that other programmers will visit your code. Keep the job simple for them.

SOLID

Robert C. Martin, in his book “Agile Software Development”, introduced the SOLID principles, an acronym created by Michael Feathers. These principles guide best practices when utilizing the Object-Oriented Paradigm, a widely accepted industry standard for writing code. They include:

Single Responsibility

A class, method, or component should have a single responsibility. The way to determine that responsibility is by ensuring there is only one reason to change it. When an object has many responsibilities, it’s more likely to be modified. The more often code is changed, the higher the risk of introducing errors.

Open/Closed

Classes should be open for extension and closed for modification. If you want to extend existing functionality, create new subclasses. Don’t modify previous code, just add to it.
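
A minimal sketch of the idea, using hypothetical exporter classes: a new output format is added as a new subclass, while `save_report` and the existing exporter stay untouched.

```python
import json
from abc import ABC, abstractmethod


class Exporter(ABC):
    """Extension point: new formats subclass this (hypothetical name)."""

    @abstractmethod
    def export(self, rows: list[dict]) -> str: ...


class CsvExporter(Exporter):
    def export(self, rows: list[dict]) -> str:
        if not rows:
            return ""
        header = ",".join(rows[0])
        lines = [",".join(str(value) for value in row.values()) for row in rows]
        return "\n".join([header, *lines])


class JsonExporter(Exporter):
    """Added later; no existing class or function had to change."""

    def export(self, rows: list[dict]) -> str:
        return json.dumps(rows)


def save_report(rows: list[dict], exporter: Exporter) -> str:
    # Closed for modification: works with any current or future Exporter.
    return exporter.export(rows)


rows = [{"id": 1, "name": "a"}]
print(save_report(rows, CsvExporter()))
print(save_report(rows, JsonExporter()))
```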

Liskov Substitution

Objects of a subclass should be usable anywhere the parent class is expected, which means the subclass must support every attribute and method it inherits. For example, if you have a Bird class with a method called fly(), a Penguin subclass would break that promise. Instead, keep fly() out of Bird and create a FlyingBird subclass for current and future birds that fly.
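
The bird example could be laid out roughly like this. The class and function names are illustrative; the point is that any function written against `Bird` keeps working when handed a `Penguin`.

```python
class Bird:
    def __init__(self, name: str) -> None:
        self.name = name

    def eat(self) -> str:
        return f"{self.name} eats"


class FlyingBird(Bird):
    """Only birds that can actually fly get a fly() method."""

    def fly(self) -> str:
        return f"{self.name} flies"


class Sparrow(FlyingBird):
    pass


class Penguin(Bird):
    def swim(self) -> str:
        return f"{self.name} swims"


def feed_all(birds: list[Bird]) -> list[str]:
    # Safe for every Bird subclass, including Penguin.
    return [bird.eat() for bird in birds]


def release(birds: list[FlyingBird]) -> list[str]:
    # Only accepts birds that promise fly().
    return [bird.fly() for bird in birds]


print(feed_all([Sparrow("sparrow"), Penguin("penguin")]))
print(release([Sparrow("sparrow")]))
```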

Interface Segregation

Interfaces here have nothing to do with the User Interface. An interface is the declaration of the methods a class must provide. It forms a contract between the class and the code that calls it. The Interface Segregation principle supports the creation of small, coherent, and concise interfaces. A fat interface is hard to debug and to modify.

Dependency Inversion

Dependency Inversion advocates for decoupled objects. High-level classes (which contain business logic) shouldn’t depend on lower-level classes (which contain implementation details), and vice versa. When they depend on each other directly, a failure or change in one propagates to the other. Instead, both must depend on abstractions (such as interfaces).
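
A minimal sketch using `typing.Protocol` as the abstraction. `MessageSender`, `OrderService`, and the two sender classes are hypothetical names; the high-level service only knows the protocol, so the low-level implementation can be swapped freely.

```python
from typing import Protocol


class MessageSender(Protocol):
    """Abstraction that both layers depend on."""

    def send(self, recipient: str, body: str) -> None: ...


class OrderService:
    """High-level business logic; knows only the abstraction."""

    def __init__(self, sender: MessageSender) -> None:
        self._sender = sender

    def confirm(self, customer: str, order_id: int) -> None:
        self._sender.send(customer, f"Order {order_id} confirmed")


class ConsoleSender:
    """Low-level detail: prints instead of talking to a real mail server."""

    def send(self, recipient: str, body: str) -> None:
        print(f"to {recipient}: {body}")


class InMemorySender:
    """Second implementation, handy in tests."""

    def __init__(self) -> None:
        self.outbox: list[tuple[str, str]] = []

    def send(self, recipient: str, body: str) -> None:
        self.outbox.append((recipient, body))


OrderService(ConsoleSender()).confirm("ana@example.com", 42)
```

Swapping `ConsoleSender` for `InMemorySender` requires no change to `OrderService`.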

GRASP

General Responsibility Assignment Software Patterns (GRASP) are a set of guidelines for assigning responsibilities to classes and objects in object-oriented design. They help you create well-structured, maintainable, and understandable code. Here’s a breakdown of the nine core GRASP principles:

1. Information Expert: Assign responsibilities to the class that has the information necessary to fulfill them.

2. Creator: Determine which class should be responsible for creating new instances of other classes, often the class that aggregates or contains the other class.

3. Low Coupling: Minimize dependencies between classes to reduce the impact of changes and improve maintainability.

4. Controller: Assign a controller class to handle user interface events or system events.

5. High Cohesion: Keep related responsibilities together in a single class to make it more understandable and manageable.

6. Indirection: Introduce an intermediary object to decouple two classes, reducing their direct dependency.

7. Polymorphism: Use polymorphism to handle variations in behavior based on object type.

8. Protected Variations: Design the system to protect parts of the system from changes in other parts by encapsulating points of variation.

9. Pure Fabrication: Create a class to fulfill a responsibility that doesn’t naturally belong to any existing class, often a utility or helper class.

Conclusion

There is no magic formula to write clean code. Not even data can drive your decisions on writing code. Code quality metrics are a tool, not a deterministic sign of bad code. Clean code is more about sensibility than anything else. Writing code isn’t manual labor, nor is software development a factory.

Robert Martin, in his book “Clean Code”, compares clean code with martial arts. Developers have their own schools of thought, just as martial artists have their specific martial arts. While they all differ in their visions of what clean code really means, the goal is the same: producing quality code that makes programming easier and applications run smoothly.